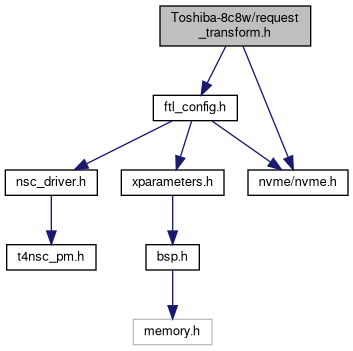

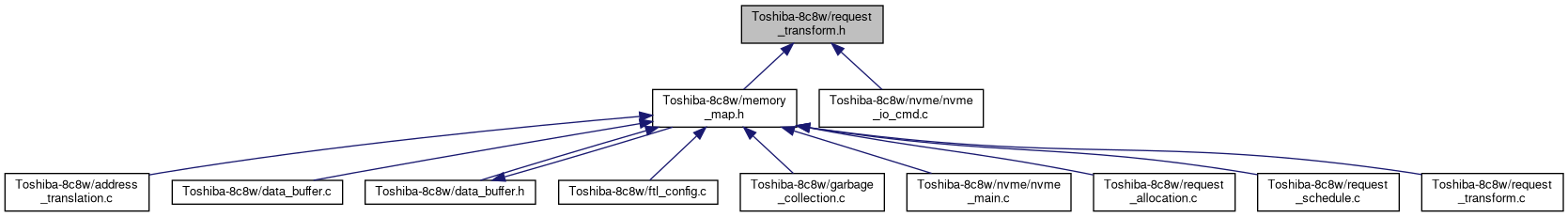

Go to the source code of this file.

Data Structures

| Type | Name | Description |
|---|---|---|
| struct | _ROW_ADDR_DEPENDENCY_ENTRY | The dependency info of this physical block. |
| struct | _ROW_ADDR_DEPENDENCY_TABLE | The row address dependency table for all the user blocks. |

Macros

| Kind | Definition |
|---|---|
| #define | NVME_COMMAND_AUTO_COMPLETION_OFF 0 |
| #define | NVME_COMMAND_AUTO_COMPLETION_ON 1 |
| #define | ROW_ADDR_DEPENDENCY_CHECK_OPT_SELECT 0 |
| #define | ROW_ADDR_DEPENDENCY_CHECK_OPT_RELEASE 1 |
| #define | BUF_DEPENDENCY_REPORT_BLOCKED 0 |
| #define | BUF_DEPENDENCY_REPORT_PASS 1 |
| #define | ROW_ADDR_DEPENDENCY_REPORT_BLOCKED 0 |
| #define | ROW_ADDR_DEPENDENCY_REPORT_PASS 1 |
| #define | ROW_ADDR_DEPENDENCY_TABLE_UPDATE_REPORT_DONE 0 |
| #define | ROW_ADDR_DEPENDENCY_TABLE_UPDATE_REPORT_SYNC 1 |
| #define | ROW_ADDR_DEP_ENTRY(iCh, iWay, iBlk) (&rowAddrDependencyTablePtr->block[(iCh)][(iWay)][(iBlk)]) |

Typedefs

| Type | Name | Description |
|---|---|---|
| typedef struct _ROW_ADDR_DEPENDENCY_ENTRY | ROW_ADDR_DEPENDENCY_ENTRY | The dependency info of this physical block. |
| typedef struct _ROW_ADDR_DEPENDENCY_ENTRY * | P_ROW_ADDR_DEPENDENCY_ENTRY | |
| typedef struct _ROW_ADDR_DEPENDENCY_TABLE | ROW_ADDR_DEPENDENCY_TABLE | The row address dependency table for all the user blocks. |
| typedef struct _ROW_ADDR_DEPENDENCY_TABLE * | P_ROW_ADDR_DEPENDENCY_TABLE | |

Functions | |

| void | InitDependencyTable () |

| void | ReqTransNvmeToSlice (unsigned int cmdSlotTag, unsigned int startLba, unsigned int nlb, unsigned int cmdCode) |

| Split NVMe command into slice requests. More... | |

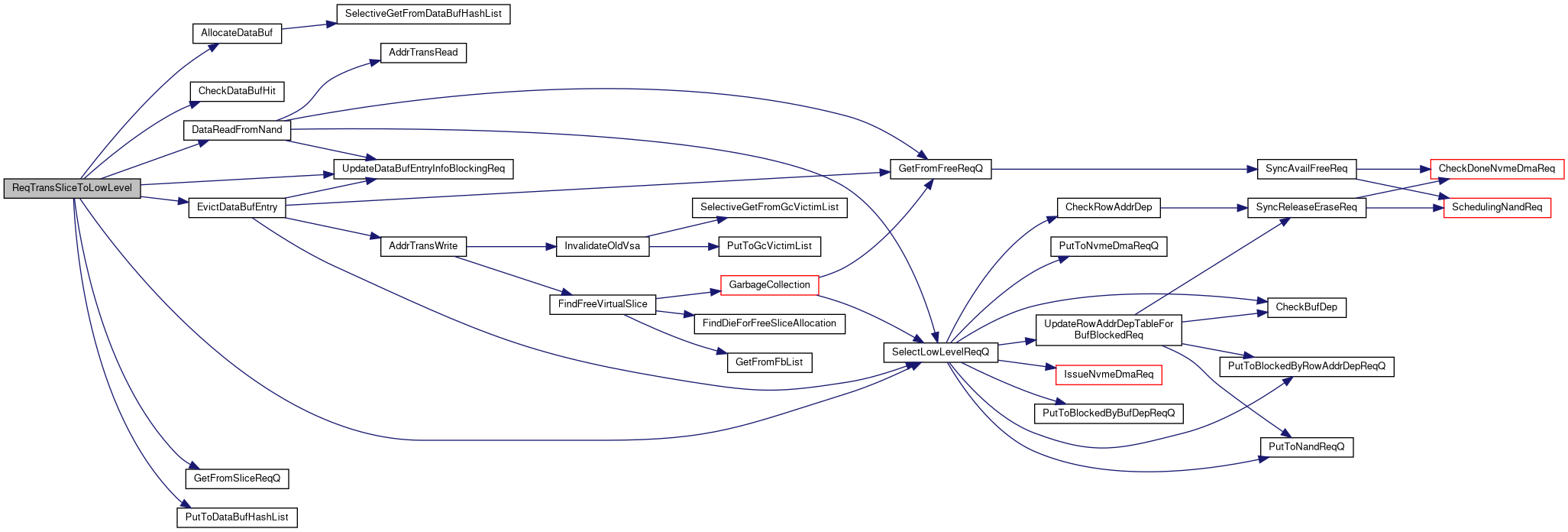

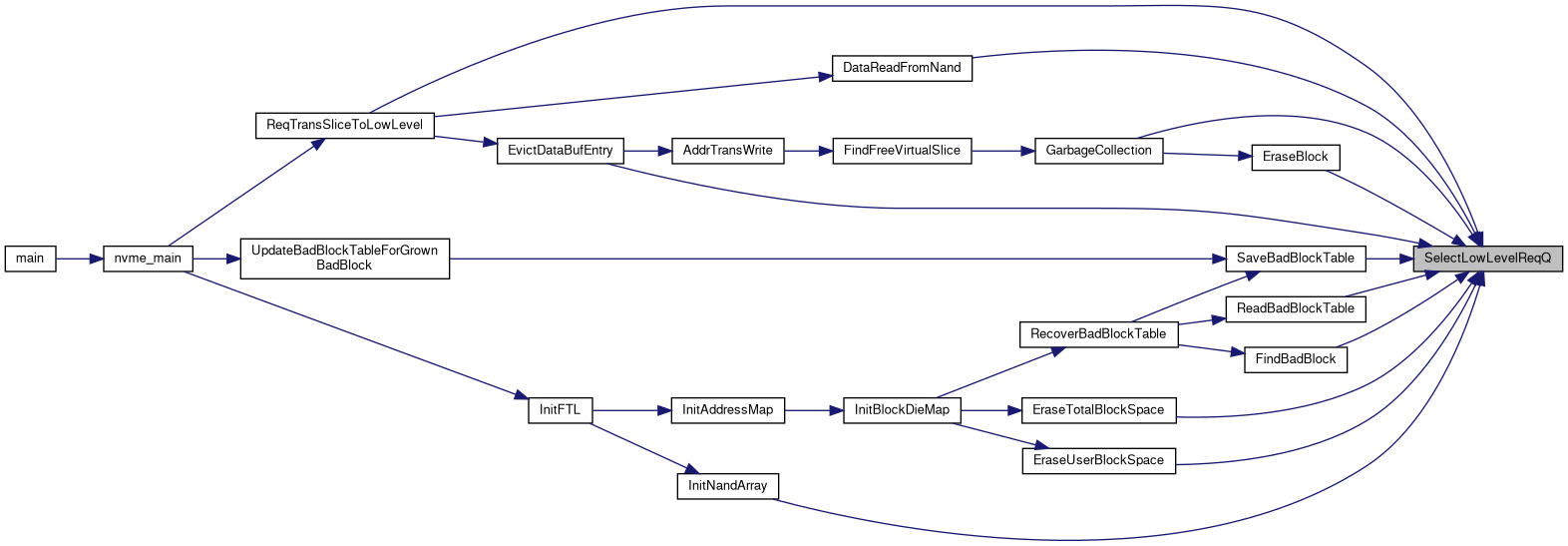

| void | ReqTransSliceToLowLevel () |

| Data Buffer Manager. Handle all the pending slice requests. More... | |

| void | IssueNvmeDmaReq (unsigned int reqSlotTag) |

| Allocate data buffer for the specified DMA request and inform the controller. More... | |

| void | CheckDoneNvmeDmaReq () |

| void | SelectLowLevelReqQ (unsigned int reqSlotTag) |

| Dispatch given NVMe/NAND request to corresponding request queue. More... | |

| void | ReleaseBlockedByBufDepReq (unsigned int reqSlotTag) |

| Pop the specified request from the buffer dependency queue. More... | |

| void | ReleaseBlockedByRowAddrDepReq (unsigned int chNo, unsigned int wayNo) |

| Update the row address dependency of all the requests on the specified die. More... | |

Variables

| Type | Name |
|---|---|
| P_ROW_ADDR_DEPENDENCY_TABLE | rowAddrDependencyTablePtr |

Macro Definition Documentation

◆ BUF_DEPENDENCY_REPORT_BLOCKED

| #define BUF_DEPENDENCY_REPORT_BLOCKED 0 |

Definition at line 58 of file request_transform.h.

◆ BUF_DEPENDENCY_REPORT_PASS

| #define BUF_DEPENDENCY_REPORT_PASS 1 |

Definition at line 59 of file request_transform.h.

◆ NVME_COMMAND_AUTO_COMPLETION_OFF

| #define NVME_COMMAND_AUTO_COMPLETION_OFF 0 |

Definition at line 52 of file request_transform.h.

◆ NVME_COMMAND_AUTO_COMPLETION_ON

| #define NVME_COMMAND_AUTO_COMPLETION_ON 1 |

Definition at line 53 of file request_transform.h.

◆ ROW_ADDR_DEP_ENTRY

| #define ROW_ADDR_DEP_ENTRY(iCh, iWay, iBlk) (&rowAddrDependencyTablePtr->block[(iCh)][(iWay)][(iBlk)]) |

Definition at line 112 of file request_transform.h.
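The table is a three-dimensional array indexed by channel, way, and block, and ROW_ADDR_DEP_ENTRY simply returns the address of one entry. The sketch below shows that layout under stated assumptions: the geometry constants (USER_CHANNELS, USER_WAYS, USER_BLOCKS_PER_DIE), the entry fields, and InitDependencyTableSketch() are hypothetical placeholders rather than the firmware's real definitions; only the macro body is copied from this header.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical geometry; the real firmware uses its own constants. */
#define USER_CHANNELS       2
#define USER_WAYS           2
#define USER_BLOCKS_PER_DIE 8

/* Hypothetical entry fields, for illustration only. */
typedef struct _ROW_ADDR_DEPENDENCY_ENTRY {
    unsigned int permittedProgPage;   /* next page allowed to be programmed */
    unsigned int blockedReadReqCnt;   /* reads waiting for an unprogrammed page */
    unsigned int blockedEraseReqFlag; /* nonzero while an erase is waiting */
} ROW_ADDR_DEPENDENCY_ENTRY, *P_ROW_ADDR_DEPENDENCY_ENTRY;

typedef struct _ROW_ADDR_DEPENDENCY_TABLE {
    ROW_ADDR_DEPENDENCY_ENTRY block[USER_CHANNELS][USER_WAYS][USER_BLOCKS_PER_DIE];
} ROW_ADDR_DEPENDENCY_TABLE, *P_ROW_ADDR_DEPENDENCY_TABLE;

/* Backing storage for the sketch; the firmware places the table elsewhere. */
static ROW_ADDR_DEPENDENCY_TABLE rowAddrDependencyTableStorage;
P_ROW_ADDR_DEPENDENCY_TABLE rowAddrDependencyTablePtr = &rowAddrDependencyTableStorage;

/* Macro body as defined in request_transform.h. */
#define ROW_ADDR_DEP_ENTRY(iCh, iWay, iBlk) \
    (&rowAddrDependencyTablePtr->block[(iCh)][(iWay)][(iBlk)])

/* Reset every entry to "nothing programmed, nothing blocked". */
void InitDependencyTableSketch(void)
{
    memset(rowAddrDependencyTablePtr, 0, sizeof(*rowAddrDependencyTablePtr));
}
```

Because the macro expands to a pointer, callers can read or update an entry in place, for example ROW_ADDR_DEP_ENTRY(ch, way, blk)->blockedReadReqCnt++.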

◆ ROW_ADDR_DEPENDENCY_CHECK_OPT_RELEASE

| #define ROW_ADDR_DEPENDENCY_CHECK_OPT_RELEASE 1 |

Definition at line 56 of file request_transform.h.

◆ ROW_ADDR_DEPENDENCY_CHECK_OPT_SELECT

| #define ROW_ADDR_DEPENDENCY_CHECK_OPT_SELECT 0 |

Definition at line 55 of file request_transform.h.

◆ ROW_ADDR_DEPENDENCY_REPORT_BLOCKED

| #define ROW_ADDR_DEPENDENCY_REPORT_BLOCKED 0 |

Definition at line 61 of file request_transform.h.

◆ ROW_ADDR_DEPENDENCY_REPORT_PASS

| #define ROW_ADDR_DEPENDENCY_REPORT_PASS 1 |

Definition at line 62 of file request_transform.h.

◆ ROW_ADDR_DEPENDENCY_TABLE_UPDATE_REPORT_DONE

| #define ROW_ADDR_DEPENDENCY_TABLE_UPDATE_REPORT_DONE 0 |

Definition at line 64 of file request_transform.h.

◆ ROW_ADDR_DEPENDENCY_TABLE_UPDATE_REPORT_SYNC

| #define ROW_ADDR_DEPENDENCY_TABLE_UPDATE_REPORT_SYNC 1 |

Definition at line 65 of file request_transform.h.

Typedef Documentation

◆ P_ROW_ADDR_DEPENDENCY_ENTRY

| typedef struct _ROW_ADDR_DEPENDENCY_ENTRY * P_ROW_ADDR_DEPENDENCY_ENTRY |

◆ P_ROW_ADDR_DEPENDENCY_TABLE

| typedef struct _ROW_ADDR_DEPENDENCY_TABLE * P_ROW_ADDR_DEPENDENCY_TABLE |

◆ ROW_ADDR_DEPENDENCY_ENTRY

| typedef struct _ROW_ADDR_DEPENDENCY_ENTRY ROW_ADDR_DEPENDENCY_ENTRY |

The dependency info of this physical block.

To ensure that data integrity is not affected by the scheduler, the firmware should maintain some information for each flash block to avoid situations such as an erase request being reordered before the read requests on the same block.

- Note

- important for scheduling
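One way to read "avoid reordering an erase before the reads" is as a small per-block state machine. The sketch below is a simplified, hypothetical version of such a check: it assumes that pages are programmed strictly in order, that a read may pass only once its page has been programmed, and that an erase must wait while any read on the same block is still blocked. The field names, the REQ_CODE_* values, and CheckRowAddrDepSketch() are assumptions; only the option and report macros take their values from this header.

```c
#include <assert.h>

/* Values from request_transform.h. */
#define ROW_ADDR_DEPENDENCY_CHECK_OPT_SELECT  0
#define ROW_ADDR_DEPENDENCY_CHECK_OPT_RELEASE 1
#define ROW_ADDR_DEPENDENCY_REPORT_BLOCKED    0
#define ROW_ADDR_DEPENDENCY_REPORT_PASS       1

/* Hypothetical request codes. */
enum { REQ_CODE_READ, REQ_CODE_WRITE, REQ_CODE_ERASE };

/* Hypothetical per-block dependency fields. */
typedef struct {
    unsigned int permittedProgPage;   /* pages below this index are programmed */
    unsigned int blockedReadReqCnt;   /* reads currently blocked on this block */
    unsigned int blockedEraseReqFlag; /* nonzero while an erase is blocked */
} DEP_ENTRY_SKETCH;

/* SELECT: first check of a new request (blocked reads are counted).
   RELEASE: recheck of a request that was blocked earlier. */
int CheckRowAddrDepSketch(DEP_ENTRY_SKETCH *e, int reqCode,
                          unsigned int pageNo, int checkOpt)
{
    if (reqCode == REQ_CODE_READ) {
        if (pageNo < e->permittedProgPage) {
            if (checkOpt == ROW_ADDR_DEPENDENCY_CHECK_OPT_RELEASE) {
                assert(e->blockedReadReqCnt > 0);
                e->blockedReadReqCnt--;  /* it was counted when first blocked */
            }
            return ROW_ADDR_DEPENDENCY_REPORT_PASS;
        }
        if (checkOpt == ROW_ADDR_DEPENDENCY_CHECK_OPT_SELECT)
            e->blockedReadReqCnt++;
        return ROW_ADDR_DEPENDENCY_REPORT_BLOCKED;
    }

    if (reqCode == REQ_CODE_WRITE) {
        if (pageNo == e->permittedProgPage) {  /* in-order programming only */
            e->permittedProgPage++;
            return ROW_ADDR_DEPENDENCY_REPORT_PASS;
        }
        return ROW_ADDR_DEPENDENCY_REPORT_BLOCKED;
    }

    /* REQ_CODE_ERASE: must not overtake reads still waiting on this block. */
    if (e->blockedReadReqCnt == 0) {
        e->permittedProgPage = 0;
        e->blockedEraseReqFlag = 0;
        return ROW_ADDR_DEPENDENCY_REPORT_PASS;
    }
    e->blockedEraseReqFlag = 1;
    return ROW_ADDR_DEPENDENCY_REPORT_BLOCKED;
}
```

Under these assumptions, an erase that arrives while a read is still waiting for its page to be programmed is held back until that read has been released.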

◆ ROW_ADDR_DEPENDENCY_TABLE

| typedef struct _ROW_ADDR_DEPENDENCY_TABLE ROW_ADDR_DEPENDENCY_TABLE |

The row address dependency table for all the user blocks.

- See also

ROW_ADDR_DEPENDENCY_ENTRY.

Function Documentation

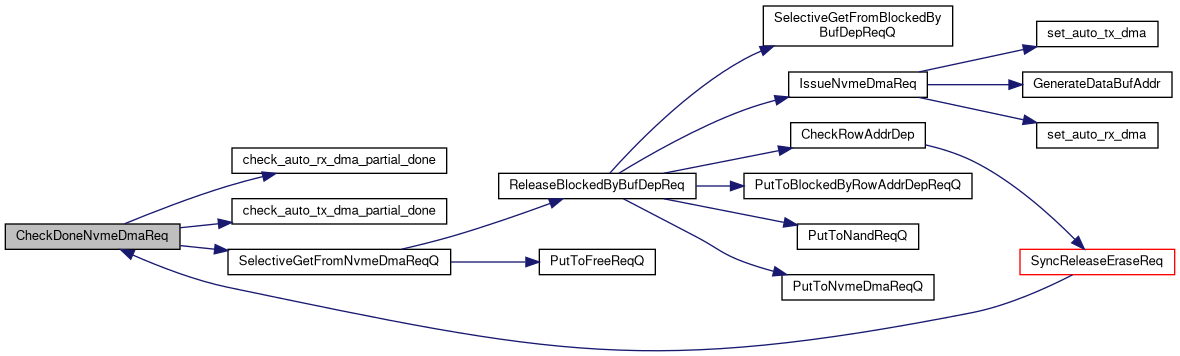

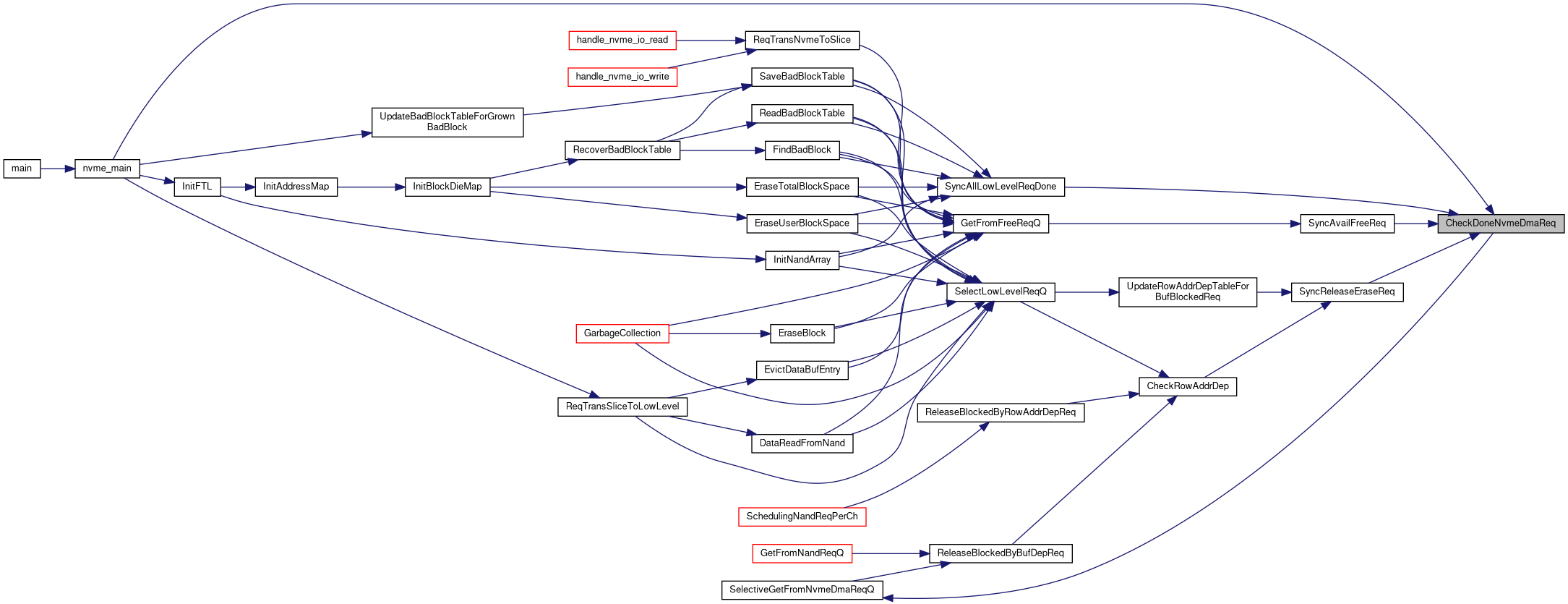

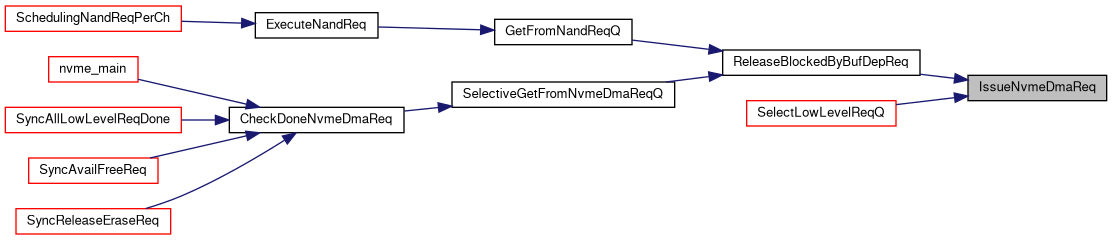

◆ CheckDoneNvmeDmaReq()

| void CheckDoneNvmeDmaReq () |

Definition at line 918 of file request_transform.c.

◆ InitDependencyTable()

| void InitDependencyTable () |

Definition at line 58 of file request_transform.c.

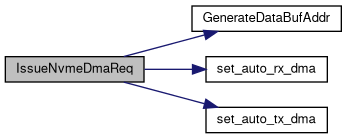

◆ IssueNvmeDmaReq()

| void IssueNvmeDmaReq (unsigned int reqSlotTag) |

Allocate data buffer for the specified DMA request and inform the controller.

This function issues a new DMA request. The DMA procedure can be split into 2 steps:

1. Prepare a buffer based on the member dataBufFormat of the specified DMA request.
2. Inform the NVMe controller.

A DMA request may receive or transmit data larger than 4 KB, which is the NVMe block size, so the firmware needs to inform the NVMe controller once for each NVMe block.

The tail register of the DMA queue is updated inside set_auto_rx_dma() and set_auto_tx_dma(), so nvmeDmaInfo.reqTail must be updated after issuing the DMA request.
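The per-block loop described above can be sketched as follows. queue_one_block() is a hypothetical stand-in for set_auto_rx_dma()/set_auto_tx_dma() (whose real signatures are not shown on this page), the queue depth of 256 is an assumption, and the returned tail plays the role of nvmeDmaInfo.reqTail.

```c
#include <assert.h>

#define BYTES_PER_NVME_BLOCK 4096u  /* 4 KB NVMe block, as stated above */
#define DMA_QUEUE_DEPTH      256u   /* hypothetical hardware queue depth */

/* Hypothetical model of the controller's auto-DMA queue. */
typedef struct {
    unsigned int tail;             /* advanced once per queued NVMe block */
    unsigned int lastBlockOffset;  /* last NVMe block offset queued */
    unsigned int lastDevAddr;      /* last buffer address queued */
} DMA_QUEUE_SKETCH;

/* Stand-in for set_auto_rx_dma()/set_auto_tx_dma(): queues one NVMe block
   and advances the tail, as the real helpers are described to do. */
static void queue_one_block(DMA_QUEUE_SKETCH *q, unsigned int blockOffset,
                            unsigned int devAddr)
{
    q->lastBlockOffset = blockOffset;
    q->lastDevAddr = devAddr;
    q->tail = (q->tail + 1) % DMA_QUEUE_DEPTH;
}

/* Inform the controller once per NVMe block, then return the new tail so the
   caller can record it (the role played by nvmeDmaInfo.reqTail). */
unsigned int IssueNvmeDmaSketch(DMA_QUEUE_SKETCH *q, unsigned int devAddr,
                                unsigned int startBlockOffset,
                                unsigned int numOfNvmeBlock)
{
    assert(numOfNvmeBlock > 0);
    for (unsigned int i = 0; i < numOfNvmeBlock; i++) {
        queue_one_block(q, startBlockOffset + i, devAddr);
        devAddr += BYTES_PER_NVME_BLOCK;  /* next 4 KB of the data buffer */
    }
    return q->tail;
}
```

For a three-block request starting at buffer address 0x1000, the blocks are queued at 0x1000, 0x2000, and 0x3000, and the tail advances by three.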

- Warning

- For a DMA request, the buffer address generated by GenerateDataBufAddr() is chosen based on REQ_OPT_DATA_BUF_ENTRY. However, since the size of a data entry is BYTES_PER_DATA_REGION_OF_SLICE (default 4), will the data buffer used by the DMA request overlap with other requests' data buffers if the numOfNvmeBlock of the DMA request is larger than NVME_BLOCKS_PER_PAGE?

- Parameters

- reqSlotTag: The request pool index of the given request.

Definition at line 878 of file request_transform.c.

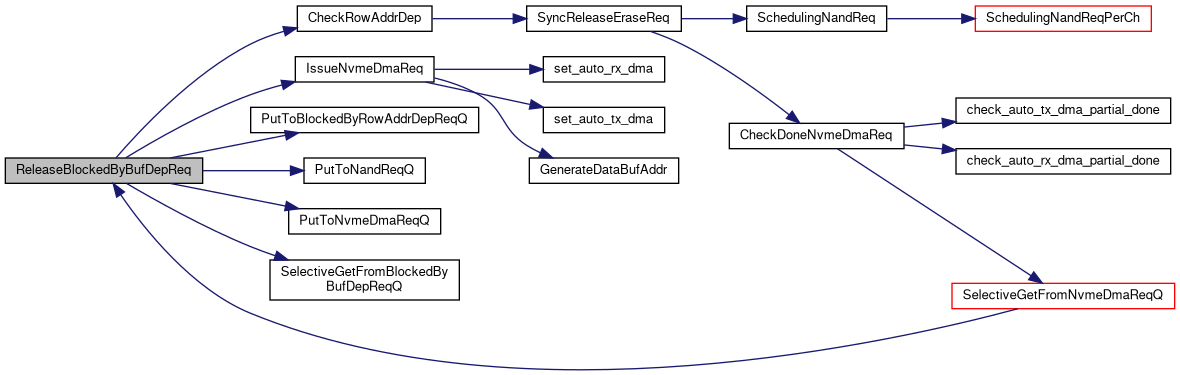

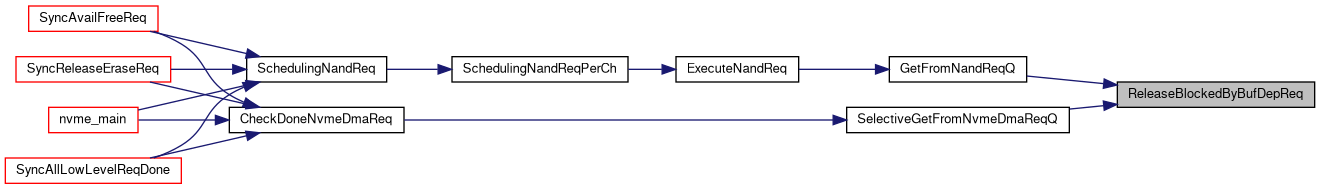

◆ ReleaseBlockedByBufDepReq()

| void ReleaseBlockedByBufDepReq (unsigned int reqSlotTag) |

Pop the specified request from the buffer dependency queue.

In the current implementation, this function is only called after the specified request entry is moved to the free request queue, which means that the previous request has released the data buffer entry it occupied. Therefore, we now need to update the relevant information about the data buffer dependency.

- Warning

- Only the NAND requests with VSA can use this function.

- Since the struct DATA_BUF_ENTRY maintains only the tail of the blocked requests, the specified request should be the head of the blocked requests to ensure that the request order is not messed up.
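The tail-only bookkeeping in this warning can be illustrated with a doubly linked chain of requests per buffer entry. In the sketch below, the field names, REQ_SLOT_TAG_NONE, AppendBufUserSketch(), and ReleaseBufHeadSketch() are hypothetical; it shows why the released request must be the head: only the head's successor can safely be unblocked without reordering the chain.

```c
#include <assert.h>

#define REQ_SLOT_TAG_NONE 0xFFFFFFFFu  /* hypothetical "no request" marker */

typedef struct {
    unsigned int prevBlockingReq;  /* request this one waits for */
    unsigned int nextBlockingReq;  /* request waiting for this one */
} BUF_DEP_REQ_SKETCH;

typedef struct {
    unsigned int blockingReqTail;  /* newest user of this buffer entry */
} DATA_BUF_ENTRY_SKETCH;

/* Append a request to the buffer entry's chain; returns 1 if it is blocked. */
int AppendBufUserSketch(BUF_DEP_REQ_SKETCH *pool, DATA_BUF_ENTRY_SKETCH *buf,
                        unsigned int tag)
{
    pool[tag].prevBlockingReq = buf->blockingReqTail;
    pool[tag].nextBlockingReq = REQ_SLOT_TAG_NONE;
    if (buf->blockingReqTail != REQ_SLOT_TAG_NONE)
        pool[buf->blockingReqTail].nextBlockingReq = tag;
    buf->blockingReqTail = tag;
    return pool[tag].prevBlockingReq != REQ_SLOT_TAG_NONE;
}

/* Release the head of the chain; returns the request that becomes
   unblocked, or REQ_SLOT_TAG_NONE if the chain is now empty. */
unsigned int ReleaseBufHeadSketch(BUF_DEP_REQ_SKETCH *pool,
                                  DATA_BUF_ENTRY_SKETCH *buf, unsigned int tag)
{
    unsigned int next = pool[tag].nextBlockingReq;

    assert(pool[tag].prevBlockingReq == REQ_SLOT_TAG_NONE);  /* must be head */
    if (next != REQ_SLOT_TAG_NONE) {
        pool[next].prevBlockingReq = REQ_SLOT_TAG_NONE;
    } else {
        assert(buf->blockingReqTail == tag);  /* head with no successor is tail */
        buf->blockingReqTail = REQ_SLOT_TAG_NONE;
    }
    pool[tag].nextBlockingReq = REQ_SLOT_TAG_NONE;
    return next;
}
```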

- Parameters

- reqSlotTag: The request entry index of the given request.

Definition at line 729 of file request_transform.c.

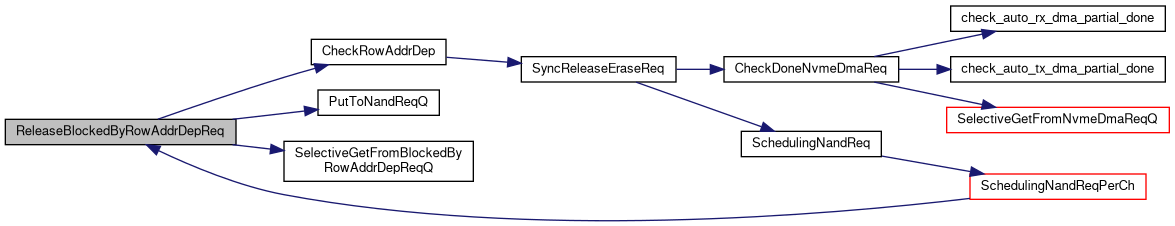

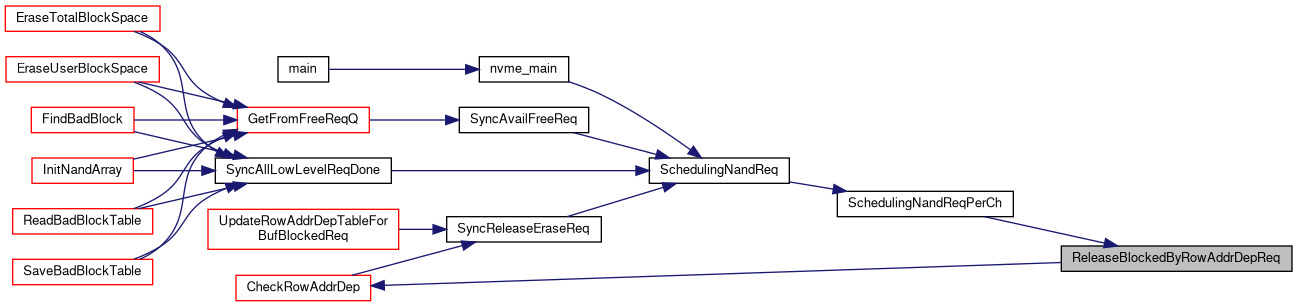

◆ ReleaseBlockedByRowAddrDepReq()

| void ReleaseBlockedByRowAddrDepReq (unsigned int chNo, unsigned int wayNo) |

Update the row address dependency of all the requests on the specified die.

Traverse the blockedByRowAddrDepReqQ of the specified die and recheck the row address dependency of every request on that die. When a request passes the dependency check, it is dispatched (moved to the NAND request queue).

By updating the row address dependency info, some requests on the target die may be released.
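The traversal can be sketched as a single pass over the die's blocked queue that dispatches every request passing the recheck and keeps the rest in their original order. To stay short, the sketch models only blocked reads and uses a hypothetical per-block permittedProgPage array as the dependency state; the queue type and ReleaseBlockedByRowAddrDepSketch() are assumptions, not the firmware's structures.

```c
#include <assert.h>

#define MAX_QUEUED 8u  /* hypothetical queue capacity */

typedef struct {
    unsigned int blockNo;
    unsigned int pageNo;
} NAND_READ_SKETCH;

typedef struct {
    NAND_READ_SKETCH req[MAX_QUEUED];
    unsigned int count;
} DIE_QUEUE_SKETCH;

/* Recheck every blocked read on one die. A read passes once its page is
   below the block's permittedProgPage; passing reads move to nandQ, the
   others stay in blockedQ in their original order. Returns the number
   of released requests. */
unsigned int ReleaseBlockedByRowAddrDepSketch(DIE_QUEUE_SKETCH *blockedQ,
                                              DIE_QUEUE_SKETCH *nandQ,
                                              const unsigned int *permittedProgPage)
{
    unsigned int kept = 0, released = 0;

    for (unsigned int i = 0; i < blockedQ->count; i++) {
        NAND_READ_SKETCH r = blockedQ->req[i];
        if (r.pageNo < permittedProgPage[r.blockNo]) {
            assert(nandQ->count < MAX_QUEUED);
            nandQ->req[nandQ->count++] = r;  /* dispatch */
            released++;
        } else {
            blockedQ->req[kept++] = r;       /* still blocked, order kept */
        }
    }
    blockedQ->count = kept;
    return released;
}
```

Compacting in place is safe because kept never exceeds i, so no unread entry is overwritten.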

- See also

CheckRowAddrDep().

- Parameters

- chNo: The channel number of the specified die.
- wayNo: The way number of the specified die.

Definition at line 820 of file request_transform.c.

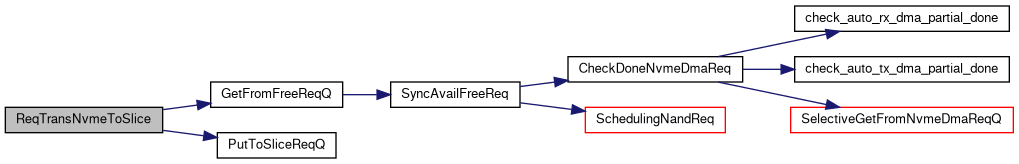

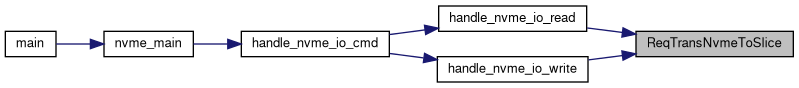

◆ ReqTransNvmeToSlice()

| void ReqTransNvmeToSlice (unsigned int cmdSlotTag, unsigned int startLba, unsigned int nlb, unsigned int cmdCode) |

Split NVMe command into slice requests.

- Note

- The unit of the given startLba and nlb is the NVMe block, not the NAND block.

To get the starting LSA (logical slice address) of this NVMe command, divide the given startLba by NVME_BLOCKS_PER_SLICE, which indicates how many NVMe blocks can be merged into one slice request.

To get the number of slice requests needed by this NVMe command, first align the starting NVMe block address startLba to slice 0 (that is, keep only startLba % NVME_BLOCKS_PER_SLICE), then convert the ending NVMe block address (startLba % NVME_BLOCKS_PER_SLICE + requestedNvmeBlock) to an LSA; the result indicates the number of slice requests needed by this NVMe command.

- Note

- According to the NVMe spec, NLB is a 0's based value, so requestedNvmeBlock must be increased by 1 to get the actual number of NVMe blocks to be read/written by this NVMe command.
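The address arithmetic above can be checked with a small helper. NVME_BLOCKS_PER_SLICE = 4 is an assumed value, and ComputeSliceRangeSketch() is hypothetical; it rounds the end up so that a partially covered last slice also counts as one slice request.

```c
#include <assert.h>

#define NVME_BLOCKS_PER_SLICE 4u  /* assumed value for illustration */

typedef struct {
    unsigned int startLsa;    /* first logical slice address */
    unsigned int nvmeBlocks;  /* real number of NVMe blocks (nlb + 1) */
    unsigned int sliceCount;  /* slice requests touched by the command */
} SLICE_RANGE_SKETCH;

SLICE_RANGE_SKETCH ComputeSliceRangeSketch(unsigned int startLba, unsigned int nlb)
{
    SLICE_RANGE_SKETCH r;
    unsigned int endOffset;

    r.nvmeBlocks = nlb + 1;  /* NLB is a 0's based value */
    r.startLsa = startLba / NVME_BLOCKS_PER_SLICE;

    /* Align the start to slice 0, then express the end as a slice count. */
    endOffset = startLba % NVME_BLOCKS_PER_SLICE + r.nvmeBlocks;
    r.sliceCount = (endOffset + NVME_BLOCKS_PER_SLICE - 1) / NVME_BLOCKS_PER_SLICE;
    return r;
}
```

For example, startLba = 3 with nlb = 9 covers NVMe blocks 3 to 12: 10 blocks starting in slice 0 and spanning 4 slices.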

Now the address translation is finished, and we can start splitting the NVMe command into slice requests. The splitting process can be separated into 3 steps:

1. Fill the remaining NVMe blocks in the first slice request (head)

   Since startLba may not be aligned to the first NVMe block of the first slice request, we access only the trailing N NVMe blocks of the first slice request, where N is the number of NVMe blocks from startLba to the end of that slice.

2. Generate slice requests for the aligned NVMe blocks (body)

   General case. Each of these slice requests covers exactly NVME_BLOCKS_PER_SLICE NVMe blocks, so a simple loop generates them all in the same way.

3. Generate the slice request for the remaining NVMe blocks (tail)

   Similar to the first step, but here we access the first K NVMe blocks of the last slice request, where K is the number of remaining NVMe blocks.
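The three steps can be sketched as follows, again assuming NVME_BLOCKS_PER_SLICE = 4. The SLICE_REQ_SKETCH type, PutSlice(), and SplitIntoSlicesSketch() are hypothetical; the sketch writes slice requests into a caller-provided array rather than a request queue.

```c
#include <assert.h>

#define NVME_BLOCKS_PER_SLICE 4u  /* assumed value for illustration */

typedef struct {
    unsigned int lsa;          /* logical slice address */
    unsigned int blockOffset;  /* first NVMe block accessed within the slice */
    unsigned int numOfBlocks;  /* NVMe blocks accessed within the slice */
} SLICE_REQ_SKETCH;

static void PutSlice(SLICE_REQ_SKETCH *out, unsigned int *n, unsigned int cap,
                     unsigned int lsa, unsigned int offset, unsigned int count)
{
    assert(*n < cap);
    out[*n].lsa = lsa;
    out[*n].blockOffset = offset;
    out[*n].numOfBlocks = count;
    (*n)++;
}

/* Split an NVMe command (nlb is 0's based) into head, body, and tail slice
   requests. Returns the number of slice requests written to out. */
unsigned int SplitIntoSlicesSketch(unsigned int startLba, unsigned int nlb,
                                   SLICE_REQ_SKETCH *out, unsigned int cap)
{
    unsigned int remaining = nlb + 1;
    unsigned int lsa = startLba / NVME_BLOCKS_PER_SLICE;
    unsigned int offset = startLba % NVME_BLOCKS_PER_SLICE;
    unsigned int n = 0;

    /* Head: trailing blocks of the first, possibly misaligned, slice. */
    unsigned int head = NVME_BLOCKS_PER_SLICE - offset;
    if (head > remaining)
        head = remaining;  /* the whole command fits in the first slice */
    PutSlice(out, &n, cap, lsa++, offset, head);
    remaining -= head;

    /* Body: whole slices of exactly NVME_BLOCKS_PER_SLICE blocks. */
    while (remaining >= NVME_BLOCKS_PER_SLICE) {
        PutSlice(out, &n, cap, lsa++, 0, NVME_BLOCKS_PER_SLICE);
        remaining -= NVME_BLOCKS_PER_SLICE;
    }

    /* Tail: leading blocks of the last slice. */
    if (remaining > 0)
        PutSlice(out, &n, cap, lsa, 0, remaining);
    return n;
}
```

With startLba = 3 and nlb = 9, this yields one head slice (LSA 0, offset 3, 1 block), two body slices (LSA 1 and 2, 4 blocks each), and one tail slice (LSA 3, 1 block).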

- Todo:

- generalize the three steps

- Parameters

- cmdSlotTag: Todo: //TODO
- startLba: Address of the first logical NVMe block to read/write.
- nlb: Number of logical NVMe blocks to read/write.
- cmdCode: Opcode of the given NVMe command.

Definition at line 123 of file request_transform.c.

◆ ReqTransSliceToLowLevel()

| void ReqTransSliceToLowLevel () |

Data Buffer Manager. Handle all the pending slice requests.

This function repeats the following steps until all the pending slice requests are consumed:

1. Select a slice request from the slice request queue sliceReqQ.
2. Allocate a data buffer entry for the request and generate flash requests if needed.
   - Warning: Why is there no need to modify logicalSliceAddr and generate a flash request on a buffer hit (data cache hit)?
3. Generate an NVMe transfer/receive request for the read/write request.
   - Warning: Why is the data buffer marked dirty for a write request?
4. Dispatch the transfer/receive request by calling SelectLowLevelReqQ().

- Note

- This function is currently only called after handle_nvme_io_cmd() while handling NVMe I/O commands in nvme_main.c.

Definition at line 323 of file request_transform.c.
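A much-simplified model of this loop makes the buffer-hit and dirty-marking questions concrete. It assumes a hypothetical direct-mapped buffer of four entries and a write-back policy: on a miss, a dirty victim is flushed with a flash write and a read is filled with a flash read, while a write only marks its entry dirty. Partial-slice writes (which would need a read-modify-write) are ignored, and every name here is hypothetical. Under this assumption, the dirty flag is what tells a later eviction that the buffered data still has to reach flash.

```c
#include <assert.h>

#define BUF_ENTRIES 4u  /* hypothetical buffer size */

typedef enum { SLICE_READ, SLICE_WRITE } SLICE_OP_SKETCH;

typedef struct {
    SLICE_OP_SKETCH op;
    unsigned int lsa;
} SLICE_REQ_SKETCH;

typedef struct {
    unsigned int lsa;
    int valid;
    int dirty;
} BUF_ENTRY_SKETCH;

typedef struct {
    unsigned int flashReads;   /* fills on read misses */
    unsigned int flashWrites;  /* write-backs of dirty victims */
    unsigned int dmaReqs;      /* NVMe Tx/Rx requests */
} BUF_STATS_SKETCH;

void ReqTransSliceToLowLevelSketch(const SLICE_REQ_SKETCH *q, unsigned int count,
                                   BUF_ENTRY_SKETCH *buf, BUF_STATS_SKETCH *s)
{
    for (unsigned int i = 0; i < count; i++) {
        BUF_ENTRY_SKETCH *e = &buf[q[i].lsa % BUF_ENTRIES];  /* direct-mapped */
        int hit = e->valid && e->lsa == q[i].lsa;

        if (!hit) {
            if (e->valid && e->dirty)
                s->flashWrites++;  /* evict: flush the victim to flash */
            if (q[i].op == SLICE_READ)
                s->flashReads++;   /* fill the entry from flash */
            e->lsa = q[i].lsa;
            e->valid = 1;
            e->dirty = 0;
        }
        if (q[i].op == SLICE_WRITE)
            e->dirty = 1;  /* buffer now holds data newer than flash */
        s->dmaReqs++;      /* transfer between host and buffer */
    }
}
```

For the sequence write LSA 0, read LSA 0, read LSA 4, read LSA 1, the second request hits the buffer and needs no flash request, while the third evicts the dirty entry for LSA 0 and triggers one write-back.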

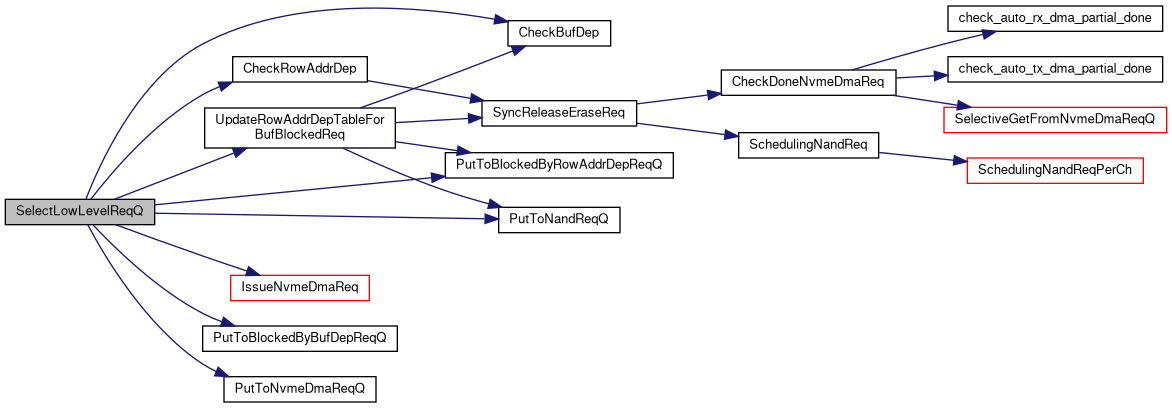

◆ SelectLowLevelReqQ()

| void SelectLowLevelReqQ (unsigned int reqSlotTag) |

Dispatch given NVMe/NAND request to corresponding request queue.

This function is in charge of issuing the given NVMe/NAND request. Before issuing it, however, we must make sure that the request is safe to issue.

We first check whether this request is blocked by any other request that uses the same data buffer (see UpdateDataBufEntryInfoBlockingReq() for details).

- If the request is not blocked by the data buffer dependency, it can be issued now, but NVMe and NAND requests are handled differently:

  - For an NVMe DMA request (Tx from/to the data buffer to/from the host), we can simply issue the request and wait for its completion.

  - For a NAND request, there may be dependency problems between requests (e.g., an ERASE cannot simply be reordered before a READ), so we must check for this kind of dependency (called "row address dependency" here) with CheckRowAddrDep() before dispatching the request. Once the request is confirmed to have no row address dependency problem, it is dispatched; otherwise, it is blocked and inserted into the row address dependency queue.

    - Note: In the current implementation, the address format of the request to be checked must be VSA. So, for requests that use physical addresses, the check option should be set to REQ_OPT_ROW_ADDR_DEPENDENCY_CHECK.

- If the request is blocked by the data buffer dependency, the firmware tries to recheck the data buffer dependency problem and release the request if possible, by calling UpdateRowAddrDepTableForBufBlockedReq(), which is similar to CheckRowAddrDep().
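The decision order described above can be summarized as a pure function. In this sketch the data buffer dependency result and the CheckRowAddrDep() result are passed in precomputed, whereas the firmware evaluates them while dispatching (and, for a request blocked by the data buffer dependency, also calls UpdateRowAddrDepTableForBufBlockedReq()). The request and destination types are hypothetical; only the report macros take their values from this header.

```c
#include <assert.h>

/* Values from request_transform.h. */
#define BUF_DEPENDENCY_REPORT_BLOCKED      0
#define BUF_DEPENDENCY_REPORT_PASS         1
#define ROW_ADDR_DEPENDENCY_REPORT_BLOCKED 0
#define ROW_ADDR_DEPENDENCY_REPORT_PASS    1

typedef enum { REQ_KIND_NVME_DMA, REQ_KIND_NAND } REQ_KIND_SKETCH;
typedef enum { TO_NVME_DMA_Q, TO_NAND_Q, TO_ROW_ADDR_DEP_Q, TO_BUF_DEP_Q } DEST_SKETCH;

typedef struct {
    REQ_KIND_SKETCH kind;
    int bufDepReport;      /* result of the data buffer dependency check */
    int checkRowAddrDep;   /* nonzero if the row address check option is set */
    int rowAddrDepReport;  /* result of CheckRowAddrDep(), if checked */
} DISPATCH_REQ_SKETCH;

DEST_SKETCH SelectLowLevelReqQSketch(const DISPATCH_REQ_SKETCH *r)
{
    if (r->bufDepReport == BUF_DEPENDENCY_REPORT_BLOCKED)
        return TO_BUF_DEP_Q;       /* wait for the buffer's current owner */
    if (r->kind == REQ_KIND_NVME_DMA)
        return TO_NVME_DMA_Q;      /* issue and wait for completion */
    if (!r->checkRowAddrDep ||
        r->rowAddrDepReport == ROW_ADDR_DEPENDENCY_REPORT_PASS)
        return TO_NAND_Q;          /* safe to dispatch to the die */
    return TO_ROW_ADDR_DEP_Q;      /* e.g. an ERASE waiting behind a READ */
}
```

Checking the buffer dependency first means that neither NVMe nor NAND requests can touch a data buffer entry still in use by an earlier request.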

- Parameters

- reqSlotTag: The request pool index of the given request.

Definition at line 634 of file request_transform.c.

Variable Documentation

◆ rowAddrDependencyTablePtr

| extern P_ROW_ADDR_DEPENDENCY_TABLE rowAddrDependencyTablePtr |

Definition at line 56 of file request_transform.c.